In today's data-driven world, web scraping plays a crucial role in gathering information from websites. Anything from contact details and email addresses to property listings or product information can be scraped and used to build custom solutions.

At the same time, web frameworks like Django let us build robust and scalable applications. Integrating Scrapy, a powerful web scraping framework, with Django provides a seamless workflow for extracting data and feeding it into a web application. Let’s explore how to integrate Scrapy with Django.

Step 1: Create and activate the virtual environment

```bash
python3 -m venv myenv
source myenv/bin/activate
```

Here, `myenv` is the name of the virtual environment.
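To double-check that the environment is active, you can run a short script (saved under any name, e.g. a hypothetical `check_venv.py`) with `python3`. This is a standard-library sketch: `venv` points `sys.prefix` at the environment directory, while `sys.base_prefix` keeps pointing at the base Python installation, so the two differ only inside a virtual environment.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a venv."""
    # venv sets sys.prefix to the environment folder; sys.base_prefix
    # still refers to the Python installation the venv was created from.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
```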

Step 2: Install Django

You can install Django using the following command:

```bash
pip install django
```

Step 3: Create a Django Project

Run the following command to create a new Django project:

```bash
django-admin startproject project
```

Replace "project" with the desired name for your Django project.

Step 4: Create a new Django App

Navigate into the project directory using the command:

```bash
cd project
```

Run the following command to create a new Django app:

```bash
python3 manage.py startapp app
```

Replace "app" with the desired name for your app.

Step 5: Configure Django Settings

- Open the `settings.py` file inside the inner `project` package (the folder next to `manage.py`, i.e. `project/settings.py`).

- In the `INSTALLED_APPS` list, add the name of your app (e.g., `'app'`) to include it in the project.

- Configure other project settings, such as the database connection and static files, according to your requirements.
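For reference, after this change the `INSTALLED_APPS` list in `project/settings.py` should look roughly like the excerpt below. The six `django.contrib` entries are what `startproject` generates by default in recent Django versions; `"app"` is this article's placeholder app name, so substitute your own.

```python
# project/settings.py (excerpt)
INSTALLED_APPS = [
    "django.contrib.admin",
    "django.contrib.auth",
    "django.contrib.contenttypes",
    "django.contrib.sessions",
    "django.contrib.messages",
    "django.contrib.staticfiles",
    "app",  # the Django app created in Step 4 (placeholder name)
]
```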

Step 6: Install Scrapy

Next, install Scrapy in the same virtual environment using the following command:

```bash
pip install scrapy
```
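To confirm that both Django and Scrapy landed in the active environment, a small standard-library check like the sketch below can help. It relies on `importlib.metadata` (Python 3.8+); the helper name `installed_version` is made up for this example.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    for name in ("Django", "Scrapy"):
        print(f"{name}: {installed_version(name) or 'not installed'}")
```

If either line prints "not installed", the `pip install` most likely ran outside the activated environment.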

Step 7: Create a Scrapy Project

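Navigate to the root folder of your Django project (the directory containing `manage.py`) and create a Scrapy project by running the following command:

```bash
scrapy startproject scraper
```

Replace "scraper" with the desired name for your Scrapy project.

This creates an outer `scraper/` directory holding `scrapy.cfg` and an inner `scraper/` Python package. Move `scrapy.cfg` and the inner `scraper/` package up into the Django project root, then delete the now-empty outer directory, so that the structure is as follows: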

- manage.py
- project/
- app/
- scrapy.cfg
- scraper/
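The restructuring described above can also be scripted with the standard library. This is a sketch that assumes it is run from the Django project root right after `scrapy startproject scraper`, and that the outer folder contains only `scrapy.cfg` and the inner package; `flatten_scrapy_project` is a hypothetical helper name. (Scrapy's `startproject` also accepts a target directory, so `scrapy startproject scraper .` should produce the same layout directly.)

```python
import shutil
from pathlib import Path


def flatten_scrapy_project(django_root=".", name="scraper"):
    """Move scrapy.cfg and the inner Scrapy package up into the Django root."""
    root = Path(django_root)
    outer = root / name              # folder created by `scrapy startproject`
    inner = outer / name             # the actual Python package
    staging = root / f"{name}_tmp"   # temporary name avoids clashing with `outer`

    # Move the package out first, since it cannot take the outer folder's
    # name while that folder still exists.
    shutil.move(str(inner), str(staging))
    shutil.move(str(outer / "scrapy.cfg"), str(root / "scrapy.cfg"))
    outer.rmdir()                    # raises OSError if anything unexpected is left
    staging.rename(root / name)


if __name__ == "__main__":
    flatten_scrapy_project()
```

The `scrapy.cfg` generated by Scrapy refers to `scraper.settings`, which still resolves because the package now sits right next to that file.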

Step 8: Generate a Spider

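Generate your first spider with the following commands:

```bash
cd scraper
scrapy genspider website_crawler domain
```

Replace "website_crawler" with the desired name for your spider, and "domain" with the target website's domain (for example, `example.com`). Scrapy places the new spider in the `scraper/spiders/` package.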

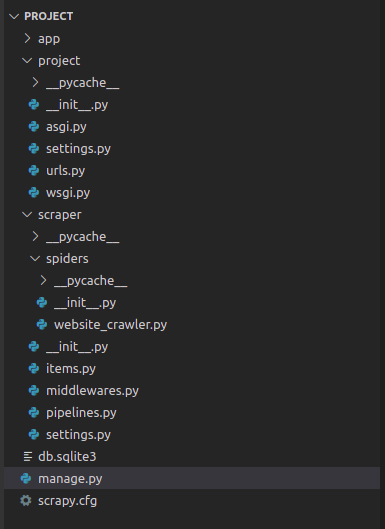

Our folder structure will somehow look like this:

As shown, `project` is the name of our Django project, `app` is the name of our Django app, `scraper` is the name of our Scrapy project, and `website_crawler` is the name of our spider.
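A quick way to confirm the layout is a short script run from the Django project root. This sketch only checks that the expected files exist; the paths use this article's placeholder names (`project`, `app`, `scraper`, `website_crawler`), and `missing_paths` is a hypothetical helper name.

```python
from pathlib import Path
from typing import List

# Files each step should have produced, relative to the Django project root.
EXPECTED_PATHS = [
    "manage.py",                           # Step 3: django-admin startproject
    "project/settings.py",                 # Step 3
    "app/models.py",                       # Step 4: manage.py startapp
    "scrapy.cfg",                          # Step 7, after moving it up
    "scraper/settings.py",                 # Step 7
    "scraper/pipelines.py",                # Step 7
    "scraper/spiders/website_crawler.py",  # Step 8: scrapy genspider
]


def missing_paths(django_root) -> List[str]:
    """Return the expected files that do not exist under django_root."""
    root = Path(django_root)
    return [rel for rel in EXPECTED_PATHS if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths(".")
    print("layout OK" if not missing else f"missing: {missing}")
```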

Step 9: Connect Scrapy to Django

To access Django models from Scrapy, establish a connection between the two frameworks. Open the "settings.py" file within the "scraper" folder and add the following code snippet:

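```python
import os
import sys
from pathlib import Path

import django

# Django integration.
# This file is <Django project root>/scraper/settings.py, so going up two
# levels reaches the folder that contains manage.py, regardless of which
# directory the scrapy command is run from. The name is lowercase so that
# Scrapy does not pick it up as a setting.
django_root = Path(__file__).resolve().parent.parent
sys.path.append(str(django_root))

# "project" is the Django project name from Step 3.
os.environ.setdefault("DJANGO_SETTINGS_MODULE", "project.settings")

django.setup()
```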

Step 10: Uncomment the Pipeline Configuration

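To enable the pipeline that processes scraped items and interacts with Django models, uncomment the following lines in the "settings.py" file within the "scraper" folder. The number (customarily in the 0 to 1000 range) sets the order in which enabled pipelines run, lowest first:

```python
ITEM_PIPELINES = {
    "scraper.pipelines.ScraperPipeline": 300,
}
```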
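The `ScraperPipeline` class referenced above lives in `scraper/pipelines.py`, and that is where scraped items can be handed to Django. Below is a minimal sketch of such a pipeline, assuming (hypothetically) that the spider yields dicts with `url` and `title` keys and that `app/models.py` defines a `Product` model with a unique `url` field and a `title` field; neither the model nor its fields come from this article. It uses Django's `update_or_create` so that re-crawling a page updates the existing row instead of inserting a duplicate.

```python
# scraper/pipelines.py: sketch that saves each scraped item via the Django ORM.
# Assumed (hypothetical): items are dicts with "url" and "title" keys, and
# app/models.py defines a Product model with a unique "url" field and "title".


class ScraperPipeline:
    """Persist scraped items to the database through a Django model."""

    def open_spider(self, spider):
        # Scrapy calls this once when the spider starts. Importing the model
        # here rather than at module level lets this module load even before
        # django.setup() has run.
        from app.models import Product  # hypothetical model

        self.model = Product

    def process_item(self, item, spider):
        url = item.get("url")
        if not url:
            # Nothing to match existing rows on: pass the item through unsaved.
            return item
        # Update the row with this URL if it exists, otherwise create it.
        self.model.objects.update_or_create(
            url=url,
            defaults={"title": (item.get("title") or "").strip()},
        )
        return item  # hand the item on to any later pipelines
```

Remember to create and apply migrations for the model (`python3 manage.py makemigrations` and `python3 manage.py migrate`) before crawling. Newer Scrapy project templates also enable an asyncio-based reactor; if Django raises `SynchronousOnlyOperation` when the pipeline saves an item, check Django's documentation on async safety before choosing a workaround.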

Step 11: Start the Spider

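You can now run your Scrapy spider from the root directory of your Django project (the one containing `manage.py` and `scrapy.cfg`) with the following command:

```bash
scrapy crawl website_crawler
```

Replace "website_crawler" with the name of your spider. Note that `scrapy crawl` takes the spider's name, not the name of the Scrapy project.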

Following these steps integrates Scrapy with your Django project. Your spider and its pipelines can now access and interact with Django models, so scraped data flows straight into your Django application. Test it out and leave a comment about how it went for you! Happy scraping!